So today, I’ve got something that’s just gonna blow your mind.

Google is gearing up to completely revolutionize the industry with this new AI they’ve been working on, and it goes by the name of Gemini. It’s seriously next-level stuff, rivaling ChatGPT and the mighty GPT-4 in terms of understanding and generating natural language. Trust me, you’re not gonna wanna miss out on this one, so make sure you stick around till the end of the blog.

Now, what’s Gemini all about?

Well, this is Google’s latest project in the world of large language models. The name is said to stand for Generalized Multimodal Intelligence Network, and it’s basically this mega-powerful AI system that can handle multiple types of data and tasks all at once.

We’re talking text, images, audio, video, even 3D models and graphs, and tasks like question answering, summarization, translation, captioning, sentiment analysis, and so on. But here’s the deal: Gemini isn’t just one single model. It’s an entire network of models, all working together to deliver the best results possible.

Alright, now how does Gemini work?

So basically, Gemini uses a brand new architecture that merges two main components: a multimodal encoder and a multimodal decoder. The encoder’s job is to convert different types of data into a common representation that the decoder can understand. Then the decoder takes over, generating outputs in different modalities based on the encoded inputs and the task at hand. Say, for instance, the input is an image and the task is to generate a caption. The encoder would turn the image into a vector that captures its features and meaning, and the decoder would then generate a text output that describes the image.
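To make the encoder/decoder idea a bit more concrete, here’s a tiny Python sketch. To be clear, this is not Gemini’s code and nothing here comes from Google. The names `Encoded`, `encode_text`, `encode_image`, and `decode_caption`, the 4-number vector, and the brightness rule are all made up for illustration. The only point is the shape of the pipeline: different inputs get turned into the same kind of vector, and the decoder only ever looks at that vector.

```python
from dataclasses import dataclass
from typing import List

DIM = 4  # size of the shared vector space (arbitrary, purely illustrative)


@dataclass
class Encoded:
    modality: str        # where the vector came from, kept only for bookkeeping
    vector: List[float]  # the shared representation the decoder reads


def encode_text(text: str) -> Encoded:
    """Toy text encoder: fold character codes into a fixed-length vector."""
    vec = [0.0] * DIM
    for i, ch in enumerate(text):
        vec[i % DIM] += ord(ch) / 1000.0
    return Encoded("text", vec)


def encode_image(pixels: List[List[int]]) -> Encoded:
    """Toy image encoder: fold 0-255 grayscale pixels into the same vector space."""
    flat = [p for row in pixels for p in row]
    count = max(len(flat), 1)  # avoid dividing by zero on an empty image
    vec = [0.0] * DIM
    for i, p in enumerate(flat):
        vec[i % DIM] += (p / 255.0) / count
    return Encoded("image", vec)


def decode_caption(encoded: Encoded) -> str:
    """Toy decoder: reads only the vector and produces a text output."""
    mean_brightness = sum(encoded.vector)  # image vectors sum to the mean pixel value
    label = "bright" if mean_brightness > 0.5 else "dark"
    return f"a mostly {label} picture"


white_square = [[255, 255], [255, 255]]
print(decode_caption(encode_image(white_square)))  # a mostly bright picture
```

A real system would learn both halves from data instead of using hand-written rules, but the hand-off is the same: image in, vector in the middle, text out.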

Now, what sets Gemini apart is that it has several advantages when compared to other large language models like GPT-4. First off, it’s just more adaptable. It can handle any type of data and task without needing specialized models or any sort of fine-tuning. Plus, it can learn from any domain and data set without being boxed in by predefined categories or labels. So compared to other models that are trained on specific domains or tasks, Gemini can tackle new and unseen scenarios much more efficiently.

Then, there’s the fact that Gemini is just more efficient in general. It uses fewer computational resources and less memory than other models that need to deal with multiple modalities separately. Also, it uses a distributed training strategy, which means it can make the most out of multiple devices and servers to speed up the learning process.

And honestly, the best part is that Gemini can scale up to larger data sets and models without compromising its performance or quality, which is pretty impressive if you ask me.

If we talk about size and complexity, one of the most common things people look at to measure a large language model is its parameter count, right?

So basically, parameters are numerical variables that serve as the learned knowledge of the model, enabling it to make predictions and generate text based on the input it receives. Generally speaking, more parameters means more potential for learning and generating diverse and accurate outputs. But having more parameters also means you need more computational resources and memory to train and use the model. Now, GPT-4 is rumored to have around one trillion parameters (OpenAI hasn’t published the actual number), which would make it about six times bigger than GPT-3.5 with its 175 billion parameters. That would make GPT-4 one of the biggest language models ever made.
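If you want to see where numbers like these come from, here’s a quick back-of-the-envelope sketch in Python. The helper names (`dense_layer_params`, `mlp_params`, `weight_memory_gb`) and the small example network are mine, just for illustration. The 2 bytes per parameter assumes 16-bit weights, and the one-trillion figure for GPT-4 is the unconfirmed number from above, so treat the results as rough estimates and not official specs.

```python
def dense_layer_params(n_in: int, n_out: int) -> int:
    """Parameters in one fully connected layer: a weight matrix plus one bias per output."""
    return n_in * n_out + n_out


def mlp_params(layer_sizes: list) -> int:
    """Total parameters in a stack of fully connected layers."""
    return sum(dense_layer_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))


def weight_memory_gb(n_params: int, bytes_per_param: int = 2) -> float:
    """Memory needed just to store the weights (assumes 2 bytes each, i.e. 16-bit)."""
    return n_params * bytes_per_param / 1e9


GPT35_PARAMS = 175_000_000_000             # figure quoted in this post
GPT4_PARAMS_RUMORED = 1_000_000_000_000    # unconfirmed figure quoted in this post

# A tiny network for scale: 784 inputs -> 128 hidden units -> 10 outputs
print(mlp_params([784, 128, 10]))              # 101770

# How much bigger the rumored GPT-4 would be than GPT-3.5
ratio = GPT4_PARAMS_RUMORED / GPT35_PARAMS
print(round(ratio, 1))                         # 5.7

# Storage for the weights alone at 16-bit precision, in gigabytes
print(weight_memory_gb(GPT35_PARAMS))          # 350.0
print(weight_memory_gb(GPT4_PARAMS_RUMORED))   # 2000.0
```

So "about six times bigger" is really closer to 5.7 times, and even just holding the weights in memory runs into hundreds of gigabytes, which is why parameter count and hardware cost go hand in hand.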

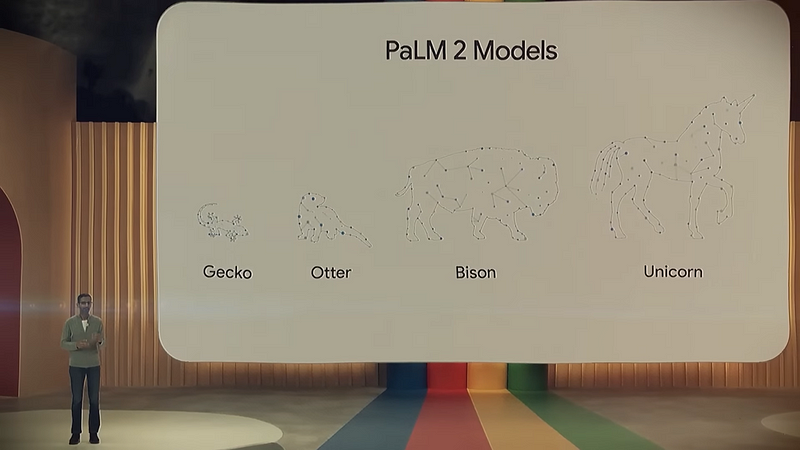

For Gemini, Google has said that it comes in four sizes: Gecko, Otter, Bison, and Unicorn. They haven’t given us the exact parameter count for each size, but based on some hints, we can guess that Unicorn is the largest and probably similar to GPT-4 in terms of parameters, maybe a bit less.

Oh, and by the way, I gotta mention this before I show you a few examples of what it can do: Gemini is more interactive and creative than other LLMs. It can churn out outputs in different modalities based on what the user prefers, and it can even generate novel and diverse outputs that aren’t bound by existing data or templates. For example, Gemini could whip up original images or videos based on text descriptions or sketches. It could also create stories or poems based on images or audio clips.

Now, let’s talk about how it can, not exactly outsmart GPT-4, but perform tasks that are more varied and longer. All right, let me give you a few examples.

One thing Gemini can do is multi-modal question answering. This is when you ask a question that involves multiple types of data, like text and images. For instance, you might ask, “Who is the author of this book?” while showing an image of a book cover, or perhaps “What is the name of this animal?” while showing an image of some creature. Gemini can answer these questions by combining its skills in understanding both text and visuals.

Another cool thing it can do is multi-modal summarization. Imagine you’ve got a piece of information that’s made up of different types of data, like text and audio. For example, you might want to summarize a podcast episode or a news article by generating a short text summary or an audio summary. Gemini can do all that by putting together its skills in textual and auditory comprehension.

A third thing is multi-modal translation. This is when you need to translate a piece of information that involves multiple types of data, like text and video. Suppose you have a video lecture or a movie trailer that you need to generate subtitles for or dub in another language. Gemini can pull that off by combining its skills in textual and visual translation.

And then there’s multi-modal generation. This is when you want to generate a piece of information that involves multiple types of data, like text and images. For example, you might want to generate an image based on a text description or a sketch, or maybe you want to generate text based on an image or a video clip. Again, Gemini can do this by combining its skills in textual and visual generation.

But to me, honestly, the most impressive thing that Gemini can do is multi-modal reasoning, which basically means it can combine information from different data types and tasks to draw conclusions.

For example, let’s say you show it a clip from a movie. Using multi-modal reasoning, Gemini can answer complex questions like “What is the main theme of this movie?” by synthesizing information from multiple modalities. It can notice patterns that happen again and again, understand how characters interact with each other, and find hidden messages or meanings in the movie.

By doing all of this, Gemini can give you a complete understanding of what the movie is really about and what its main idea or message is. And honestly, I’m seriously blown away by that.

So, these are just a few of the things Gemini can do. There’s a ton more potential here that I just can’t cover in this blog. But I hope you’re starting to see just how incredibly powerful and versatile this technology really is.

So, where does this leave us in terms of the future of AI?

Well, it’s pretty obvious to me that Google is likely going to give GPT-4 and maybe even GPT-5 a real challenge in the coming years with this multi-modal approach. This also means we’re likely to see more applications and services that use Gemini’s capabilities to provide better user experiences and solutions.

For instance, we could see more personalized assistants that can understand and respond to us in different modalities, or maybe more creative tools that can help us generate new content or ideas in different modalities.

Alright guys, those are my thoughts on Google’s Gemini. And just to be clear, I’m not some crazy fan of Google or anything. I’m just sharing my opinions based on the research, reading, and observations I’ve made.

I hope this blog has been informative and you’ve picked up something new today. If you did, I’d appreciate it if you could give it a thumbs up. Thanks for reading, and catch you in the next blog.